As Mark Twain wrote, "Facts are stubborn things, but statistics are pliable."

The statistics shared by the Chief of Police in a March 2024 community article made for grim reading. In 2023, calls for service were up 38%, reported crimes were up 34%, and 35% more charges were issued. The numbers painted a dark picture of the state of the community compared to years past.

The statistics presented had one problem: They were so different from what community members experienced that a deeper dive was needed.

Over the next five articles, we will share how we approached this analysis and enabled this community to ask better questions, provide better insight, and dig a little deeper to share an accurate picture of policing and safety in the community.

Step 1: Understanding the data

- What first-pass deductions can we gather?

- What data do we have, what can we get, and how will we correlate?

- Are we using a common language to describe the data being analyzed?

Step 2: Building the data set

Step 3: Quality control

Step 4: Presentation of insight

Step 5: Lessons learned.

While the data is unique to this community, analyzing and gaining insight from data applies to both the public and private sectors, large and small. Our BI helpdesk is a great place to start if you want to move beyond text and gain insight from your data. If you have a larger project involving data transformation and unlocking the full power of your data, connect here... we would love to start a conversation.

Step 1: Understanding the data

"Statistics. The science that says if I ate a whole chicken and you didn't eat any, then each of us ate half a chicken." Dino “Pitigrilli” Segrè

When we first started analyzing the summary data, a few elements made me wonder how the information was grouped. Traffic stops were down from five years prior, but not dramatically, and the narrative did not draw attention to them. Arrests were up while cited-and-released cases were down; taken together, there was no major difference. There was a 3-to-1 ratio of charges to arrests. Most significant, though, was the inclusion of 2020, the year of COVID, in the averages.

2020 was an anomaly. How an anomaly is treated matters because it can skew statistical calculations such as an average. There are a few accepted practices: remove it from consideration, run the calculation both ways and show the difference so the audience can decide, or, at a minimum, include a footnote.
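As a minimal sketch of the "run the calculation both ways" practice, the snippet below computes a baseline average with and without the anomalous year. The yearly counts are hypothetical placeholders chosen for illustration; they are not the community's figures.

```python
from statistics import mean

# Hypothetical yearly call counts, for illustration only (not the community's data).
calls_by_year = {2018: 2900, 2019: 3000, 2020: 2100, 2021: 2800, 2022: 2950}
ANOMALY_YEARS = {2020}


def baseline_average(counts, exclude=frozenset()):
    """Average yearly count, optionally leaving out anomalous years."""
    return mean(v for year, v in counts.items() if year not in exclude)


with_anomaly = baseline_average(calls_by_year)
without_anomaly = baseline_average(calls_by_year, ANOMALY_YEARS)

# Show both figures side by side so the audience can judge the impact.
print(f"Average including 2020: {with_anomaly:.1f}")
print(f"Average excluding 2020: {without_anomaly:.1f}")
```

Reporting both numbers, rather than silently choosing one, lets readers see how much a single unusual year moves the baseline that later years are compared against.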

That first pass did not match the narrative, which prompted further investigation. The source for the published information was the police RMS (records management system). This source contains significant PII (personally identifiable information), and redacting sensitive information can be costly and time-consuming. The other source was the dispatch system, which would have less information but had many of the elements needed for initial analysis.

We requested two years of dispatch data: 2022 and 2023. This balanced having enough data to analyze and compare against the burden of gathering it.

From the dispatch system, we now had (plus a few more data points):

- Incident number

- Date & Time

- Cross Street

- Zip code

- Activity category

- Police Department responding

Matching up the information was straightforward:

- "Incident number" equaled "Calls for Service." It was clarified that either a citizen or a police officer could generate an incident, and there was no way to break this down. We thought that distinction might become vital as we progressed, but it turned out not to be relevant, and it could be roughly inferred from the activity category.

- The activity category was consistent with the RMS.

- Time stamps allowed correlation with publicly available incident reports for deeper dives into specific activities.

Having a common data definition was critical in establishing credibility in the analysis. "Calls for service" were first thought to be just citizens calling 911, but when it was learned that officers could also initiate a "call for service," the overall information made more sense.

We now had over 5,000 records to dive into, and immediately, deeper insights began to emerge. To understand those insights, we needed more data.

Step 2: Building the data set

“Data will talk to you, if you’re willing to listen to it” - Jim Bergeson

The data was not ready for an in-depth conversation.

The best analogy I can think of for getting data ready to tell a story is what I imagine great authors do when profiling their characters. Each character has many attributes and backstories that make them who they are, even though most of those details may never appear in the novel.

To develop our data further, we needed to link where the activity took place and what municipality it was located in. GIS (Geographic Information System) to the rescue. GIS is a large topic, but for our purposes, we needed to put a pin in the street map and elaborate on its location.

We were able to leverage cross-street information and zip code together to do just that. Using an addressing service, we were able to get latitude and longitude coordinates for each incident and then overlay census maps to determine which municipality each incident occurred in.
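The overlay step boils down to a point-in-polygon test: given an incident's coordinates, find the boundary that contains it. The sketch below uses a plain ray-casting test against two hypothetical rectangular boundaries. The town names, coordinates, and the `municipality_for` helper are illustrative assumptions; a real project would more likely load census boundary files into a GIS library than hand-code polygons.

```python
def point_in_polygon(lon, lat, polygon):
    """Ray-casting test: True if (lon, lat) lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the point's latitude can be crossed
        # by a horizontal ray cast from the point toward the east.
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


# Hypothetical municipal boundaries as (lon, lat) vertices, for illustration only.
boundaries = {
    "Town A": [(-75.10, 40.00), (-75.00, 40.00), (-75.00, 40.10), (-75.10, 40.10)],
    "Town B": [(-75.00, 40.00), (-74.90, 40.00), (-74.90, 40.10), (-75.00, 40.10)],
}


def municipality_for(lon, lat):
    """Return the first boundary containing the point, or None if no match."""
    for name, polygon in boundaries.items():
        if point_in_polygon(lon, lat, polygon):
            return name
    return None


print(municipality_for(-75.05, 40.05))
print(municipality_for(-74.95, 40.05))
print(municipality_for(-76.00, 40.05))
```

Incidents that fall in no boundary return `None`, which is itself a useful signal: those records are candidates for a closer quality review.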

The need for coordinates was foundational, but unlocking the correct additional dataset to get the municipality was where domain knowledge and specialty tooling paid dividends.

It was time to talk. Our first conversation was plotting all the incidents on a street map by year. The results were eye-opening...

Step 3: Quality control

"The temptation to form premature theories upon insufficient data is the bane of our profession" - Sherlock Holmes

We were getting close, and visualizing the data showed a need for a row-by-row quality review. Multiple pins showed up over 100 miles away from the municipality we were analyzing. We started our focus there and uncovered some zip codes with numbers transposed.
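A check like this can also be automated rather than spotted by eye. The sketch below flags any incident whose coordinates are more than 100 miles from a reference point, using the haversine great-circle distance. The reference point, the sample records, and the field names are hypothetical.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_MILES = 3958.8


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two latitude/longitude points."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * asin(sqrt(a))


# Hypothetical reference point and records, for illustration only.
CENTER = (40.05, -75.05)  # (lat, lon) of the municipality being analyzed
MAX_MILES = 100

incidents = [
    {"incident": "A-1", "lat": 40.06, "lon": -75.04},
    {"incident": "A-2", "lat": 42.90, "lon": -78.85},  # e.g. a transposed zip code
]

# Collect the records that sit implausibly far from the municipality.
outliers = [
    r["incident"]
    for r in incidents
    if haversine_miles(CENTER[0], CENTER[1], r["lat"], r["lon"]) > MAX_MILES
]
print(outliers)
```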

We still had the original data and had only extended it, so we were able to review and compare outliers easily. Other data elements were self-correcting: cross streets listed both as street a/street b and as street b/street a resolved to the same coordinates.
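One way to confirm that reversed cross streets really are the same intersection is to reduce each value to an order-independent key before comparing. This is only a sketch: the `cross_street_key` helper and the sample street names are hypothetical, and it assumes the two streets are separated by a slash.

```python
def cross_street_key(value):
    """Normalize 'Street A/Street B' so the order of the streets does not matter."""
    parts = [part.strip().upper() for part in value.split("/")]
    return "/".join(sorted(parts))


# Hypothetical values, for illustration only.
print(cross_street_key("Main St/Oak Ave"))
print(cross_street_key("oak ave / main st"))
```

Both calls produce the same key, so records for the same intersection can be grouped regardless of how the dispatcher entered them.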

Quality control in data requires constant, repetitive assessment. Perfect data, like perfect testing, comes at too high a cost, and insights from imperfect but well-reviewed data can still be directionally significant at a much lower cost in both time and effort. Given the data elements and the errors in the source data, we had to loop through the review cycle six times. Each time we identified the root cause of a data error, we were able to automate its correction, which reduced the work in each subsequent run.

Our data is not error free, but after review we have great confidence that those errors are not materially significant.

Step 4: Presentation of Insight

“The greatest value of a picture is when it forces us to notice what we never expected to see.” - John Tukey

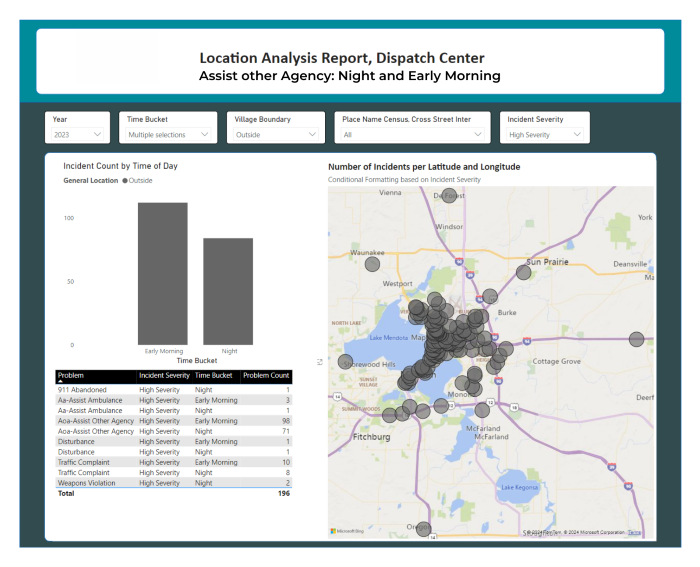

It was not just one story. The data showed multiple stories. Some are related, others highlight policy, and others are coincidental. The first: digging down, we found that the police were spending a considerable amount of time assisting other agencies as far as 20 miles away. This allowed us to ask better questions and get to the root causes of why. When we presented our map plot, it sank in.

The second was uncovering what caused the major increase in "calls for service". The new analysis showed that the single biggest year-on-year jump was in the "Check Property" category, a proactive service the police offered to residents that had only started to be recorded in the latter part of 2022 and was fully recorded in 2023. This activity was considered minimal risk and was often performed in 15 minutes or less. This insight, along with increased communication between police agencies, showed that calls for service had not jumped by 38% but by 20%, or 15% if you factor out the COVID year. Add that a 2021 budget increase added one police officer to the staff, and an increase of less than one additional "call for service" per day doesn't seem as grim as the original description.
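The kind of breakdown that surfaces a category-driven jump can be sketched as follows. The category names other than "Check Property" and all of the counts are hypothetical, so the percentages here illustrate the technique and do not reproduce the figures above.

```python
# Hypothetical category counts, for illustration only (not the community's data).
counts = {
    2022: {"Check Property": 50, "Traffic Stop": 600,
           "Assist Other Agency": 200, "Other": 1150},
    2023: {"Check Property": 500, "Traffic Stop": 620,
           "Assist Other Agency": 260, "Other": 1220},
}


def pct_change(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100


def total(year, exclude=()):
    """Total incidents for a year, optionally leaving out some categories."""
    return sum(n for cat, n in counts[year].items() if cat not in exclude)


# Find the category with the largest absolute year-on-year increase.
jumps = {cat: counts[2023][cat] - counts[2022][cat] for cat in counts[2023]}
biggest = max(jumps, key=jumps.get)

overall = pct_change(total(2022), total(2023))
adjusted = pct_change(total(2022, {biggest}), total(2023, {biggest}))

print(f"Biggest jump: {biggest} (+{jumps[biggest]})")
print(f"Overall change: {overall:.1f}%")
print(f"Change excluding {biggest}: {adjusted:.1f}%")
```

Comparing the overall change with the change after setting aside a newly recorded category separates a shift in record-keeping from a shift in actual activity.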

Step 5: Lessons Learned

Data, turned into information and insight, is a powerful tool. It can highlight an unknown root cause, identify patterns of activity, and expose gaps. It should be understandable to all; it can remove fear, uncertainty, and doubt, and it can bring people to a common ground.

“The single biggest threat to the credibility of a presentation is cherry-picked data.” – Edward Tufte

Before presenting, one must understand the why behind the changes in data. The jump in calls for service was not due to a rampant crime spree; after just a little digging, it turned out to reflect a change in late 2022 in how the police department recorded its work. They started to include a proactive service of checking property, changing the definition of the underlying data. This could have been addressed with strong data governance.

Be sure that you are not building a house of cards. The knock-on assumed correlations were now on shaky ground. Further analysis showed that multiple arrests were made in other jurisdictions, that a single interaction could produce multiple arrests, and that the arrests were concentrated in one activity: traffic stops. Many of these occurred outside the community in question.

Jumping between units of measure and groupings adds to confusion. Using plain language is also important: “calls for service” sounds like someone dialing 911 and asking for help, and it does not indicate that the police themselves create some of these incidents. The original article quoted 3,163 “calls for service”. That number sounds substantial, but broken down another way it is fewer than 9 calls per day, or approximately 1 incident every 3 hours.
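The breakdown is simple arithmetic, sketched here assuming a 365-day year. Only the 3,163 figure comes from the original article; the variable names are illustrative.

```python
calls_per_year = 3163  # "calls for service" quoted in the original article
days_per_year = 365    # assumption: a non-leap year

calls_per_day = calls_per_year / days_per_year
hours_between_calls = 24 / calls_per_day

print(f"Calls per day: {calls_per_day:.1f}")
print(f"Hours between calls: {hours_between_calls:.1f}")
```

Restating an annual total as a daily rate puts it on a scale people can relate to their own experience of the community.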

The right visual for the right information. Tables are easy, but getting information in a context that people understand and relate to is vital when sharing a story.

Being able to drill into information and answer new questions quickly was great. People could ask and answer their own questions. It became less about how the math was done and more about what the information was saying.

Policing is a difficult job; there is no doubt about that. Also difficult is balancing budgets, ensuring public trust, and allocating scarce resources. An accurate data picture can support municipalities in understanding where resources are allocated and how their workload is broken down. It can also build community trust.

By: Patrick Grant, Director of Public Sector Sales
